Although Intel doesn’t appear to be sampling its budget-oriented CPUs to the press, we’ve bought the entire bunch for testing. Today we’ve got the new Core i3-13100 on hand, and we’re also preparing to test the Core i5-13400 and 13500, which we plan to review soon. For now we wanted to look at the Core i3 model because the previous version, the i3-12100, was one of our favorite budget CPUs, so we’re keen to see what this updated 13th-gen version has to offer.

While Intel claims the Core i3-13100 is based on the Raptor Lake architecture, in reality it’s little more than a rebadged 12100 with a small bump to the clock speeds. The L2 cache capacity, for example, is just 1.25 MB per core while most Raptor Lake CPUs are meant to offer 2 MB per core, so calling the 13100 a Raptor Lake processor is a bit disingenuous, and we suppose calling it a 13th-gen part is as well.

In short, compared to the 12100, the 13100’s base clock has been boosted by 100 MHz and the Turbo clock by 200 MHz. Other than that, they’re identical chips. Both pack 5 MB of L2 cache, 12 MB of L3 cache, a max turbo power of 89 watts, and support for DDR5-4800 or DDR4-3200 memory by default, along with 16 lanes of PCI Express 5.0 and 4 lanes of PCIe 4.0.

We’re talking about a ~5% increase in clock speeds and that’s it.

The Core i3-13100 currently costs $150, whereas the 12100 can be had for $133, making the newer version 13% more expensive.
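For anyone who wants to check the arithmetic, here is a minimal sketch of the price and clock speed comparison. The prices are the ones quoted above; the clock speeds (3.3/4.3 GHz for the 12100 and 3.4/4.5 GHz for the 13100) are an assumption taken from Intel's public spec sheets rather than from this article, and `pct_increase` is just an illustrative helper name.

```python
def pct_increase(old: float, new: float) -> float:
    """Return how much larger `new` is than `old`, as a percentage."""
    return (new - old) / old * 100.0


# Prices quoted in the article (USD).
price_12100, price_13100 = 133, 150

# Clock speeds in MHz. Assumption: taken from Intel's public spec sheets,
# not stated numerically in the article.
base_12100, base_13100 = 3300, 3400
turbo_12100, turbo_13100 = 4300, 4500

print(f"Price premium: {pct_increase(price_12100, price_13100):.0f}%")
print(f"Base clock:    {pct_increase(base_12100, base_13100):.1f}%")
print(f"Turbo clock:   {pct_increase(turbo_12100, turbo_13100):.1f}%")
```

Under those assumptions the price premium works out to roughly 13%, while the clock speed gain is about 3% at base and just under 5% at turbo.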

With all of that in mind, you might be wondering why we’re bothering to test the 13100 at all and initially we thought we’d skip it. But having recommended the Core i3-12100 for the better part of 2022 and with so much movement in pricing for these lower end parts, we thought it was a segment that warranted a revisit, so here we are.

Rather than going through the standard review format, however, we thought a GPU scaling benchmark would be far more interesting. So for direct comparison we have the Ryzen 5 5600 and Ryzen 5 5500, along with the original Core i3-12100.

When the Core i3-12100 arrived there was essentially no competition. AMD had the Ryzen 3 3100 at $175, which was a ridiculous price for that part, and the Ryzen 5 5600G was even worse at $260. Back then Zen 3 was in a similar position to Zen 4 today. Everyone loves to rave about the value of Zen 3 now, with parts such as the 5800X3D dropping as low as $330 and the 5600 available for $150, but it wasn’t always that way.

A year ago neither CPU existed, and when they arrived around April, the 5600 was priced at $200. At the same time AMD introduced the Ryzen 5 5500 for $160 and since then that part has dropped to $100. The 5600 has also dropped to $150, which is the same asking price as the new 13100.

So today we have the Core i3-12100 for $133, the 13100 for $150 and these are competing with the Ryzen 5 5500 at $100 and the 5600 at $150. That’s a significantly more competitive environment than we had this time last year.

Given this is a GPU scaling benchmark, we’re obviously focusing on gaming performance, and we have a dozen titles, all of which have been tested at 1080p using the Radeon RX 6650 XT, 6950 XT and RTX 4090. The reason for testing at 1080p is simple: we want to minimize the GPU bottleneck as we’re looking at CPU performance, but by including a number of different GPUs we still get to see the effects GPU bottlenecks have on CPU performance.

As for the test systems, we’re using DDR4 memory exclusively, as the older but much cheaper memory standard makes sense when talking about sub-$200 processors. There are certainly scenarios where you would opt for DDR5, but for simplicity’s sake we’ve gone for an apples-to-apples comparison with DDR4.

We’ve decided to use G.Skill Ripjaws V 32GB DDR4-3600 CL16 memory because at $115 it’s a good quality kit that offers a nice balance of price to performance. The AM4 processors are able to run this kit at the 3600 spec using the standard CL16-19-19-36 timings. The locked Intel processors can’t run above 3466 if you want to use the Gear 1 mode, and ideally you’d want to use Gear 1 as the memory has to run well above 4000 before Gear 2 can match Gear 1.

The reason for this limitation is that the System Agent (SA) voltage is locked on non-K SKU processors, which limits the memory controller’s ability to support high-frequency memory in Gear 1 mode. That being the case, we’ve tested the 12100 and 13100 with the Ripjaws memory adjusted to 3466, as this is the optimal configuration for these processors short of manually tuning the sub-timings, which we’re not doing for this review.
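To illustrate why Gear 1 is preferred, here is a rough sketch of how the gear mode affects the memory controller clock. It assumes the usual description of Intel's gear modes, where Gear 1 runs the controller at the memory clock (1:1) and Gear 2 runs it at half that (1:2). The function name is hypothetical, and real-world latency depends on much more than this one ratio.

```python
def controller_clock_mhz(ddr_rating: int, gear: int) -> float:
    """Memory controller clock for a DDR data rate (MT/s) and gear mode.

    DDR memory transfers twice per clock, so the memory clock is half the
    rated data rate. Assumption: Gear 1 is a 1:1 ratio, Gear 2 is 1:2.
    """
    if gear not in (1, 2):
        raise ValueError("gear must be 1 or 2")
    memory_clock = ddr_rating / 2
    return memory_clock / gear


# The Gear 1 limit used for the Core i3s, then two Gear 2 alternatives.
for rating, gear in [(3466, 1), (3600, 2), (4000, 2)]:
    clock = controller_clock_mhz(rating, gear)
    print(f"DDR4-{rating} Gear {gear}: controller at {clock:.0f} MHz")
```

Under these assumptions, DDR4-3466 in Gear 1 keeps the controller at around 1733 MHz, whereas DDR4-3600 in Gear 2 drops it to 900 MHz, which is why slightly slower memory in Gear 1 tends to be the better configuration.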

Benchmarks

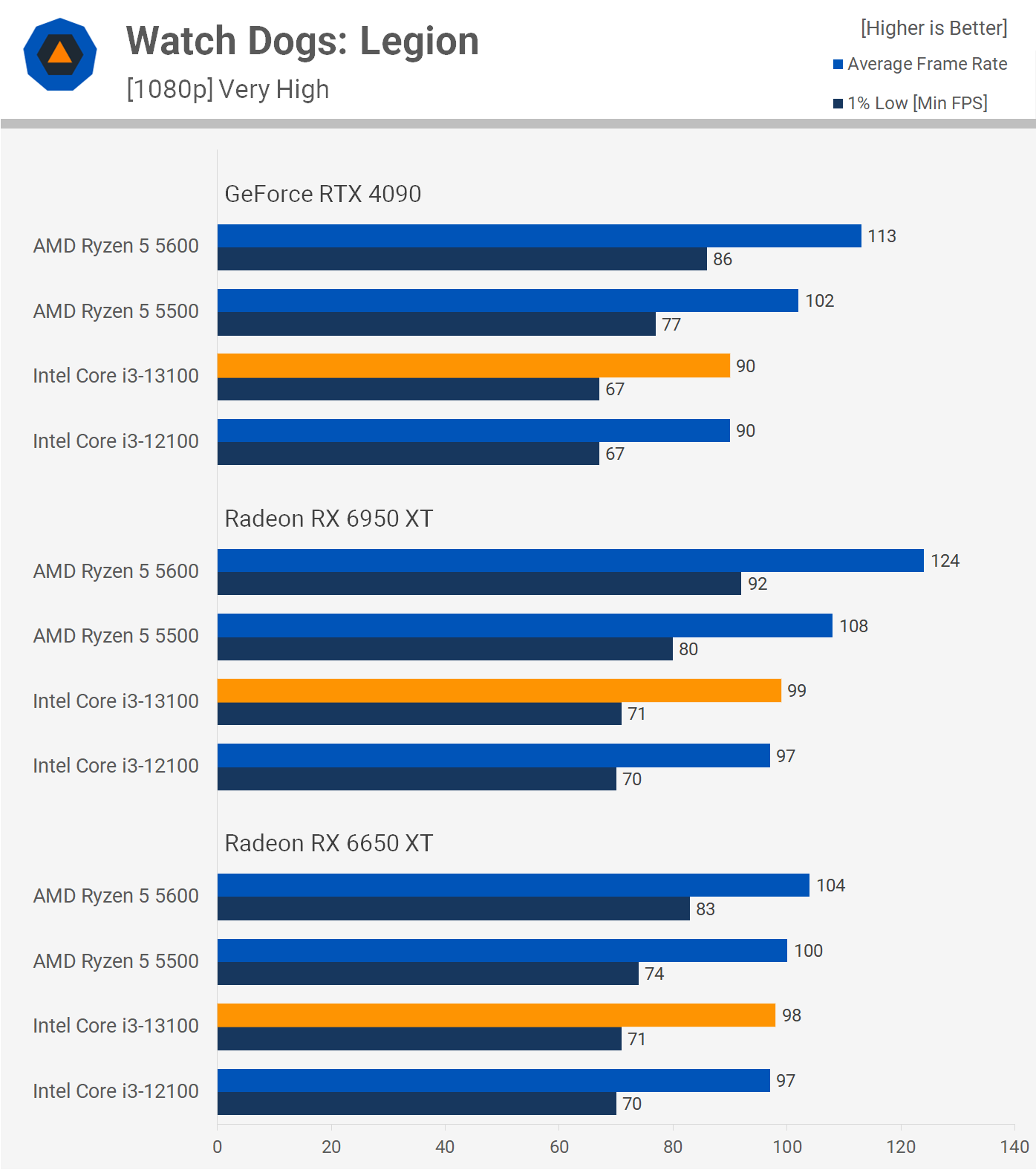

First up we have Watch Dogs: Legion and there’s a bit to discuss here. What will probably jump out first is that in a number of instances the lowly Radeon RX 6650 XT is actually faster than the RTX 4090. This was explained a while ago when we studied Nvidia driver overhead. We won’t explain it at length here, but in short Nvidia’s architecture is designed in a way that creates more driver overhead for the CPU, meaning that when performance is CPU limited, frame rates take a bigger hit with a GeForce GPU than with a Radeon GPU.

So in some instances a much lower end product like the 6650 XT can actually end up faster than the RTX 4090 as the CPU simply can’t keep up. Of course, you’d never pair a Core i3-13100 with an RTX 4090, but the same effect can be observed with lower-end GeForce GPUs, though we’re not here to explore that today.

What we do want to check out is Ryzen 5 5600 and Core i3-13100 CPU performance, and when using the 6650 XT we see that the 5600 is 6% faster when comparing the average frame rate, but 17% faster when comparing 1% lows and that’s a surprisingly big margin given the GPU we’re testing with.

Upgrading to the 6950 XT blows the average out to 25% in favor of the Ryzen 5600 and 30% for the 1% lows. We’re also looking at similar margins using the RTX 4090 despite frame rates overall being lower. Here the Ryzen 5600 is 26% faster than the Core i3-13100, or 28% faster when comparing the 1% lows.

The Core i3-13100 is also at most 2% faster than the i3-12100 in this testing, while the Ryzen 5 5500 comfortably beats both Core i3 processors.
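For readers unfamiliar with the two metrics quoted throughout, here is a minimal sketch of how an average frame rate and a 1% low can be derived from captured frame times. It assumes one common definition of the 1% low (the average frame rate across the slowest 1% of frames), which is not necessarily the exact method behind these charts, and the frame-time data is made up for illustration.

```python
from statistics import mean


def fps_summary(frame_times_ms: list[float]) -> tuple[float, float]:
    """Return (average fps, 1% low fps) for a list of frame times in ms.

    Assumption: the 1% low is the average frame rate over the slowest 1%
    of frames. Other definitions (e.g. a percentile) are also in use.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds
    # The slowest frames are the ones with the longest frame times.
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)  # keep at least one frame
    low_fps = 1000.0 / mean(slowest_first[:count])
    return average_fps, low_fps


# Hypothetical capture: 990 smooth frames at 10 ms plus 10 stutters at 25 ms.
frames = [10.0] * 990 + [25.0] * 10
avg, low = fps_summary(frames)
print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps")
```

In this made-up capture the handful of stutters barely moves the average but drags the 1% low well below it, which is why the two margins can differ so much for the same CPU pairing.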

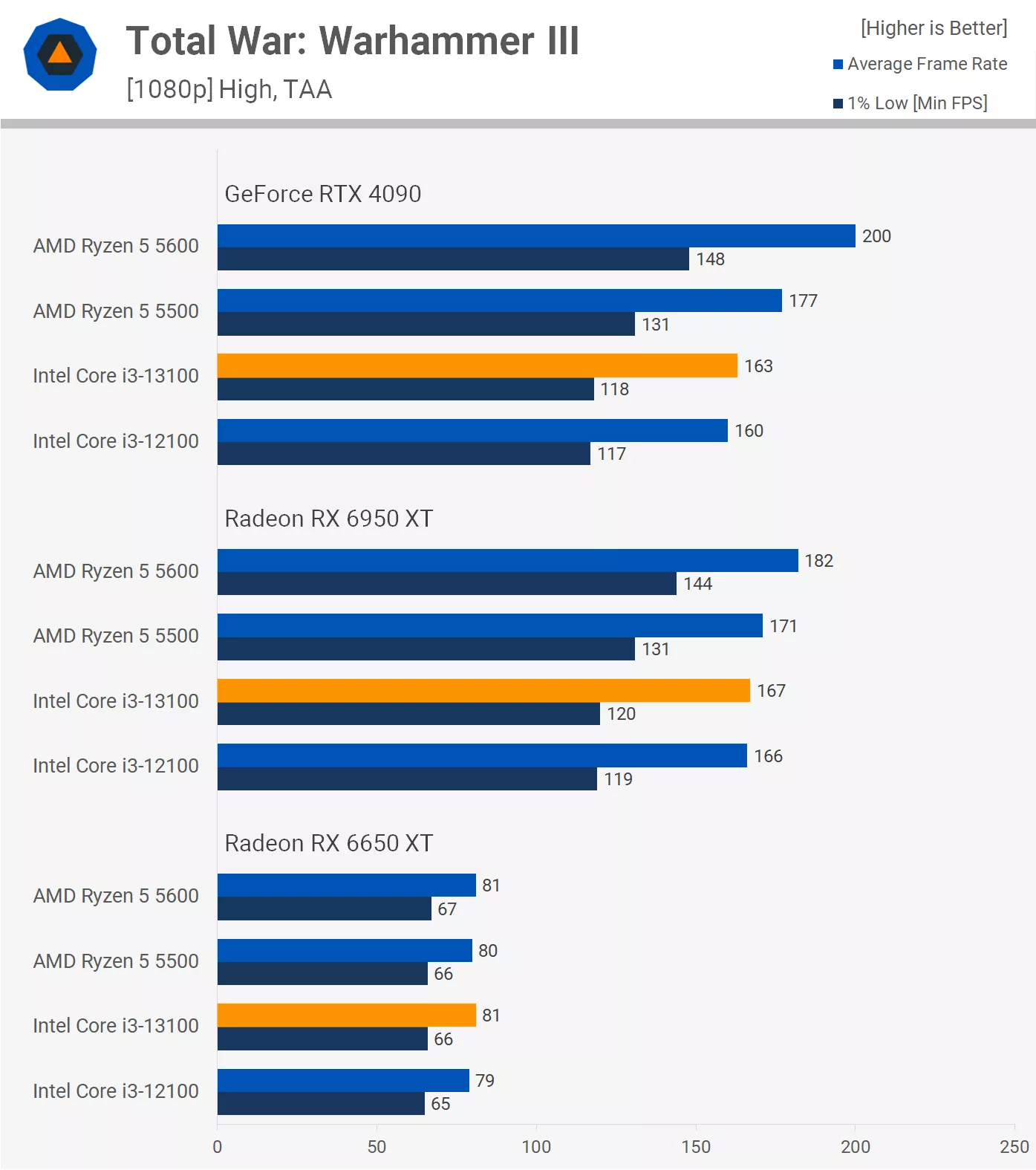

Now, Warhammer III scaling is very different to what we saw in Watch Dogs. Using the high quality preset sees the Radeon 6650 XT capped to around 80 fps and all four processors were capable of reaching those limits, so a hard GPU bottleneck.

The Radeon 6950 XT largely removes those limits and now the Ryzen 5600 is 9% faster than the Core i3-13100 when comparing the average frame rate and 20% faster for the 1% lows.

Then armed with the RTX 4090, those margins increase to 23% for the average frame rate and a 25% advantage for the Ryzen 5 when comparing the 1% lows.

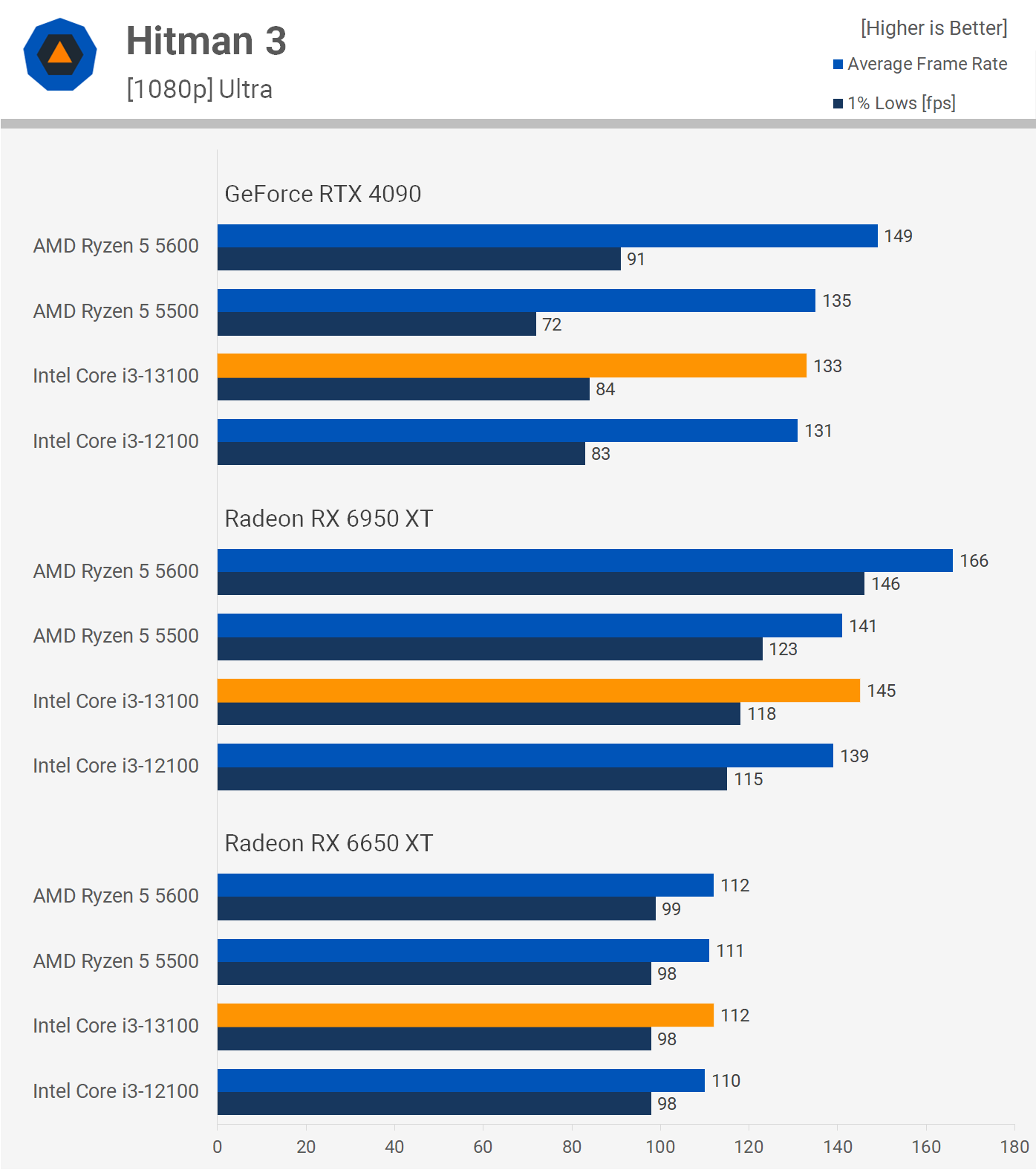

Next we have Hitman 3. First, when paired with the 6650 XT, all four CPUs produce similar frame rates at around 110 fps. However, with the 6950 XT the margins are quite different. The Ryzen 5500 roughly matched the Core i3s, but the Ryzen 5600 was 14% faster than the i3-13100 and a massive 24% faster when comparing 1% lows.

Despite that, the margins close up with the RTX 4090 again, while 1% lows tank, presumably due to the additional overhead, but this could also just be an issue with the GeForce drivers. In any case, now the Ryzen 5600 is 12% faster than the Core i3-13100 when comparing average frame rates and just 8% faster when comparing 1% lows.

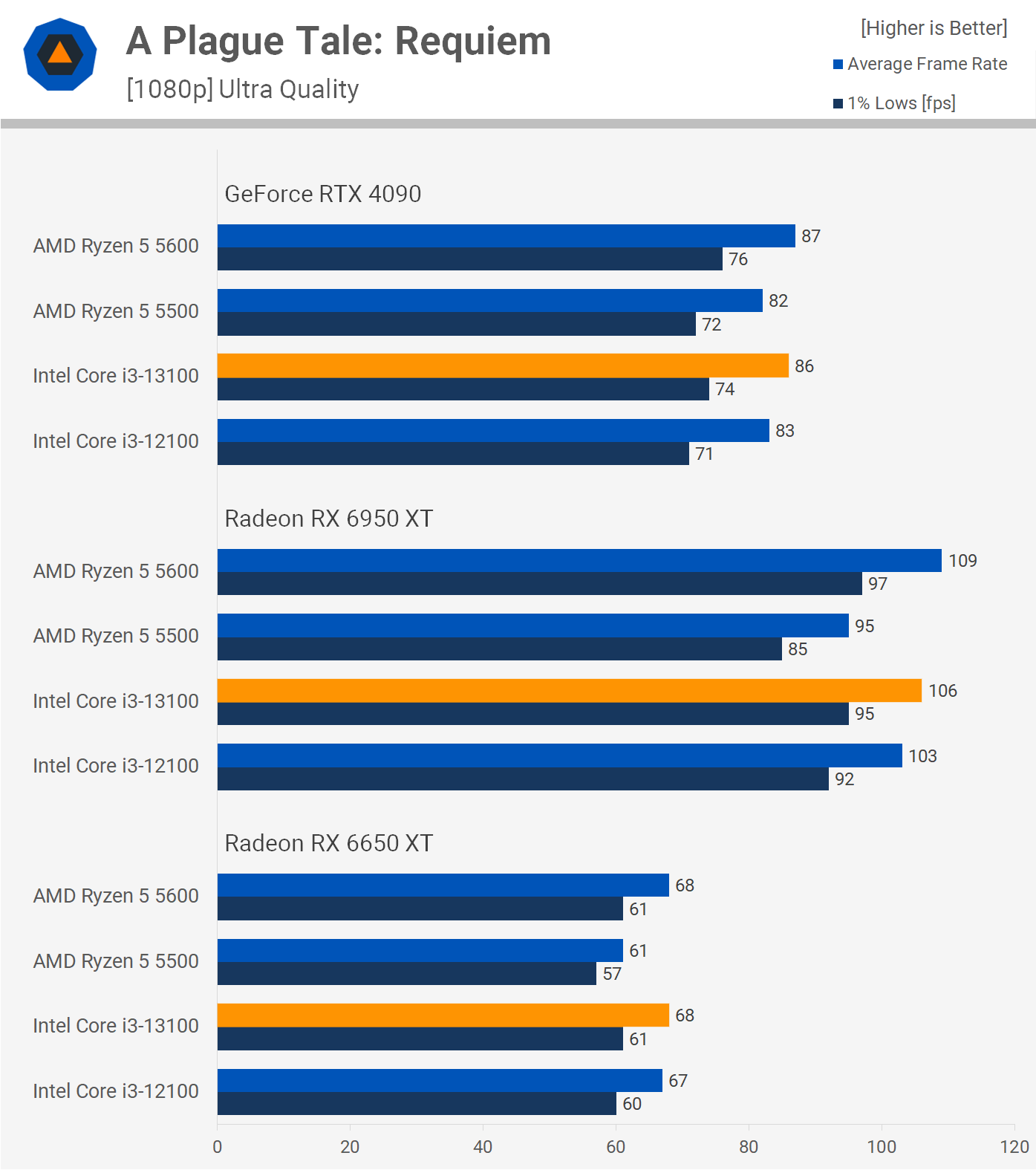

A Plague Tale: Requiem is both GPU and CPU demanding, though here we are using the ultra quality settings for this single player story-driven title and as a result the Radeon 6650 XT is only good for just over 60 fps. The Ryzen 5 5500 does struggle in this title and there seems to be a latency penalty from the much smaller L3 cache.

Again, with these lower end processors the Radeon 6950 XT is much faster than the GeForce RTX 4090 at 1080p, and in fact the 5600 was 25% faster using the Radeon GPU, pretty crazy stuff, but not surprising given what we’ve learned in past benchmarks. In terms of CPU performance though, the Ryzen 5 5600 and Core i3-13100 appear evenly matched in this title.

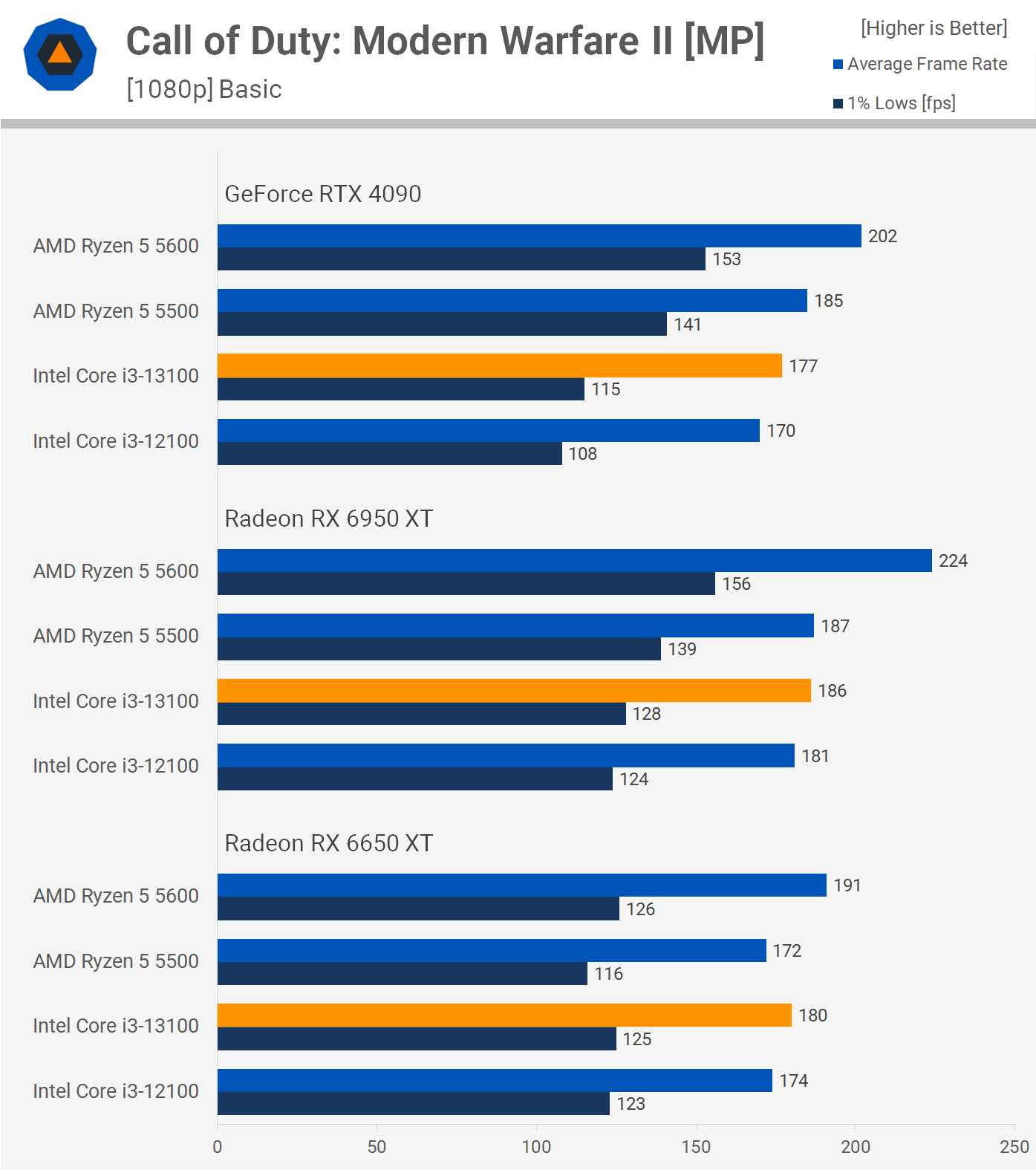

Next up we have CoD Modern Warfare II multiplayer. When using the Radeon 6650 XT, performance is similar across all four processors. The Ryzen 5600 did nudge ahead for the average frame rate, but it was only 6% faster than the Core i3-13100, so not a big margin.

The Radeon 6950 XT hands the Ryzen 5600 a 20% win when looking at the average frame rate and 22% for the 1% lows when compared to the i3-13100.

That’s interesting because when using the RTX 4090, the Ryzen 5600 was 14% faster than the Core i3-13100 for the average frame rate, but a massive 33% when comparing 1% lows. So the additional overhead of the GeForce is hurting the Core i3s in this test.

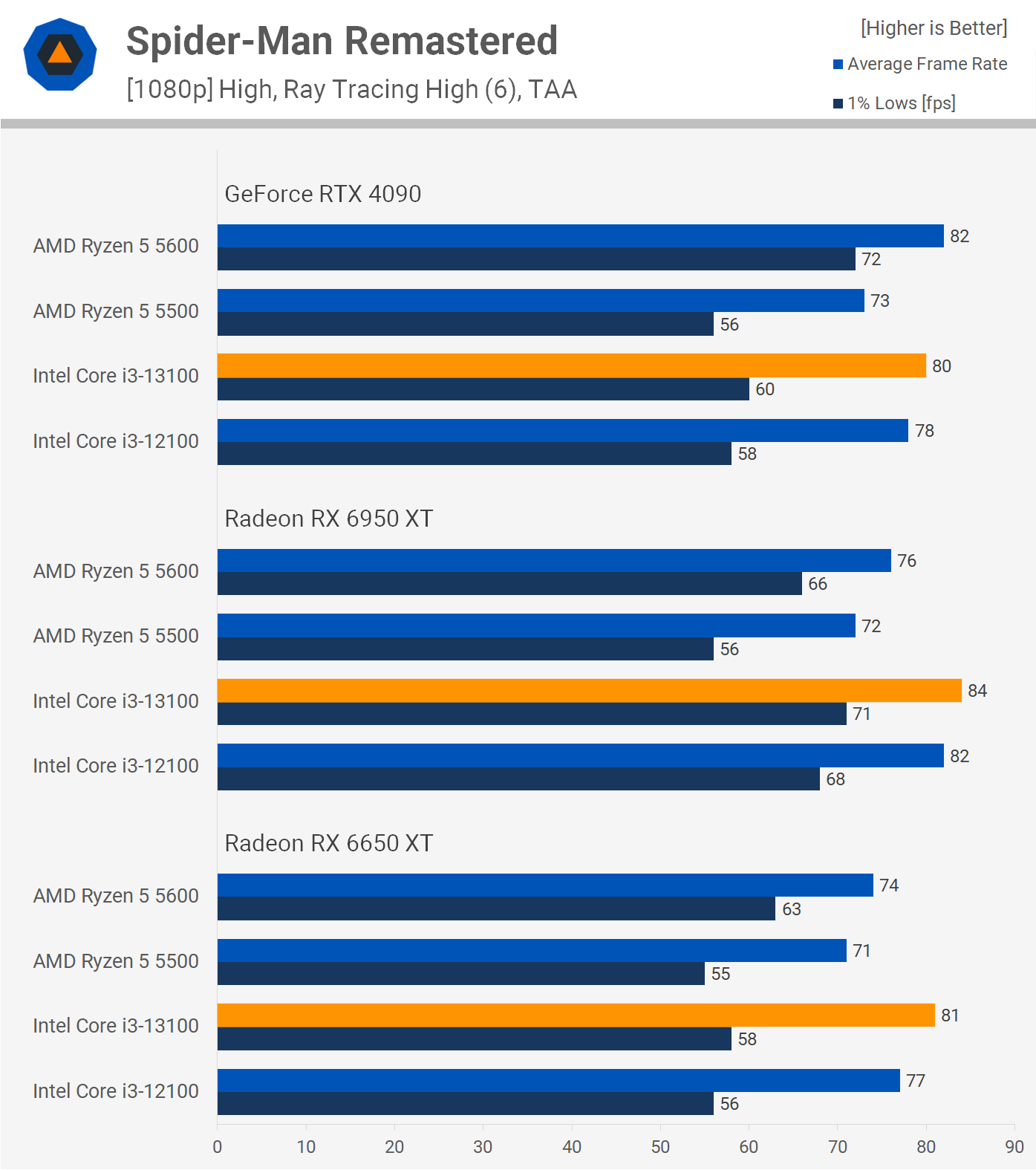

Spider-Man Remastered is CPU and GPU demanding, too, especially when using ray tracing effects like we did for this testing. These results are very CPU bound as we see little difference in performance between the three GPUs.

Quite unexpectedly, the Core i3 processors perform best with the Radeon GPUs, beating even the Ryzen 5 5600. Using the Radeon 6950 XT saw the i3-13100 beat the Ryzen 5600 by an 11% margin.

Despite that, we find very different results with the RTX 4090. Here the GeForce GPU cripples the 1% lows of the Core i3s, handing the Ryzen 5600 a 20% performance advantage.

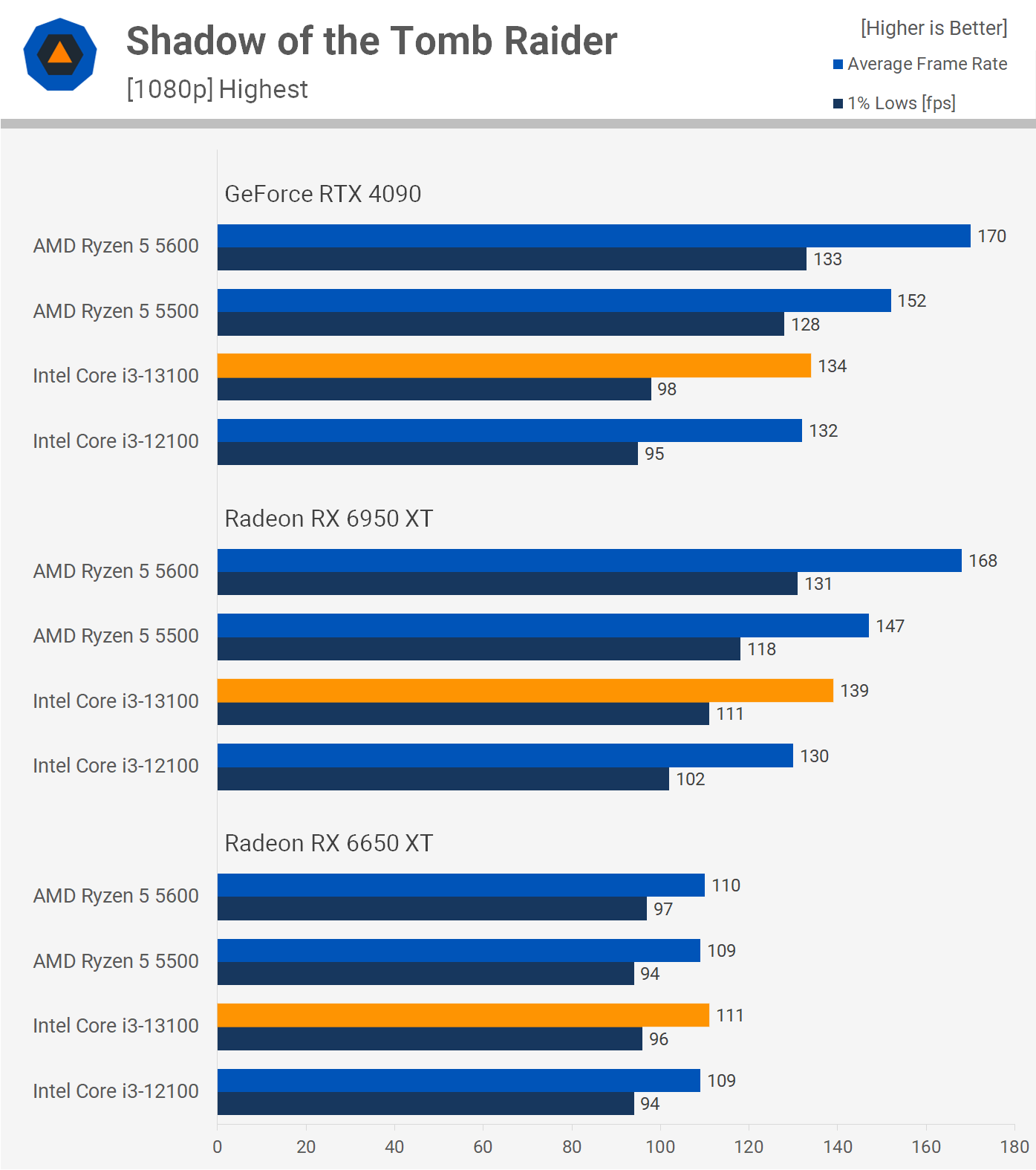

Moving on to a benchmark classic, Shadow of the Tomb Raider. Somehow this is still one of the most CPU demanding single player games we have to test with, and it was released back in 2018. To be clear, the built-in benchmark isn’t that CPU demanding and isn’t suitable for such testing, as it’s a GPU benchmark. We’re therefore testing in the village section, which is much more demanding on the CPU side.

Here the Radeon 6650 XT capped out at around 110 fps using the highest quality preset and all four CPUs were able to achieve that performance target. However, going beyond that with the 6950 XT saw the Core i3s left behind and now the 5600 is up to 21% faster than the 13100, so an easy win here for AMD.

Then with the RTX 4090 which increased CPU load further, the Ryzen 5600 is 27% faster than the 13100, or a massive 36% faster when comparing 1% lows.

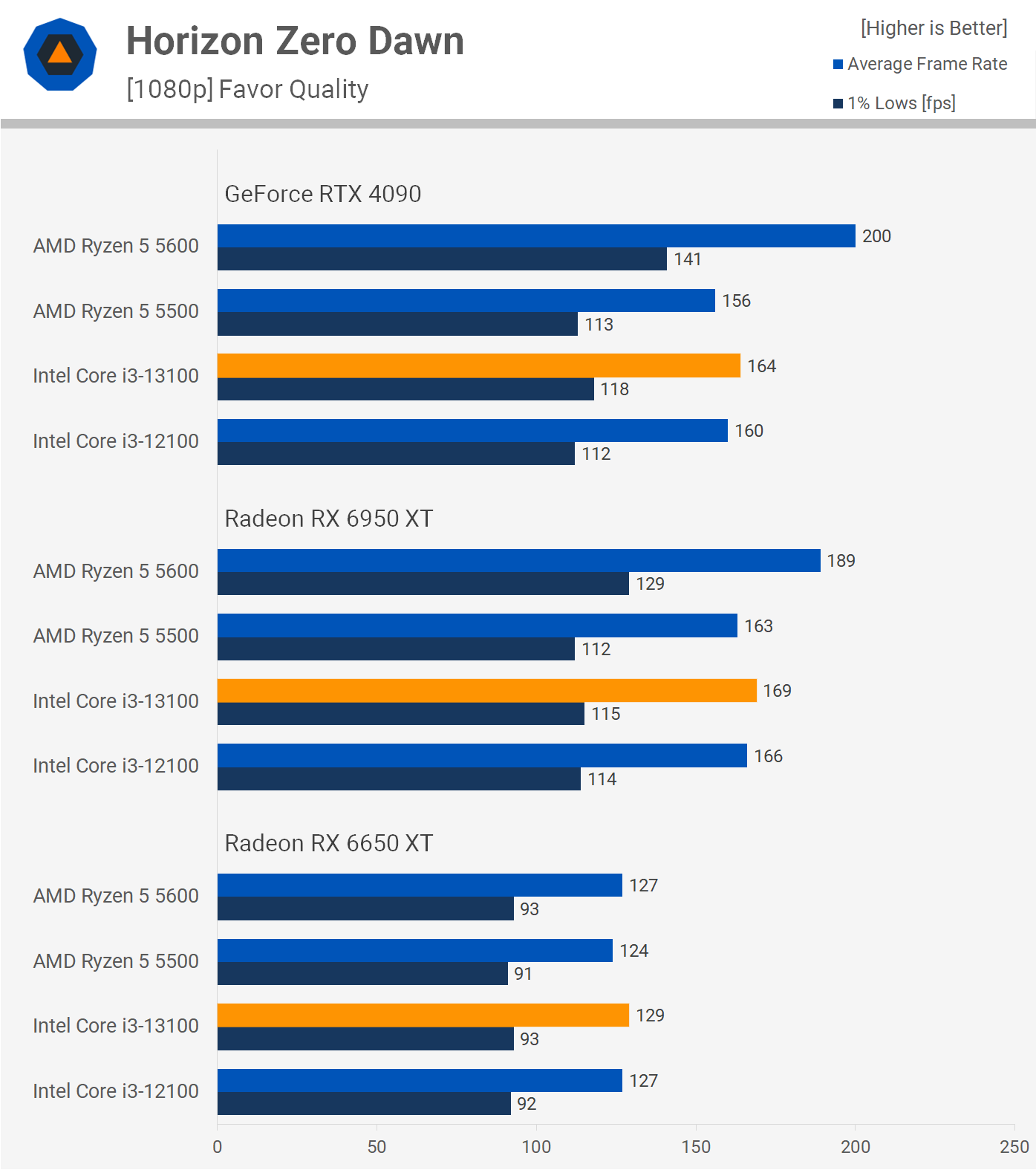

Horizon Zero Dawn shows all four CPUs maxing out the Radeon 6650 XT at between 120-130 fps. But when we moved to the Radeon 6950 XT, the 12100, 13100 and 5500 all delivered around 160 fps and the Ryzen 5600 went on to render 189 fps. That made it 12% faster than the i3-13100 and that margin grew to 22% with the RTX 4090.

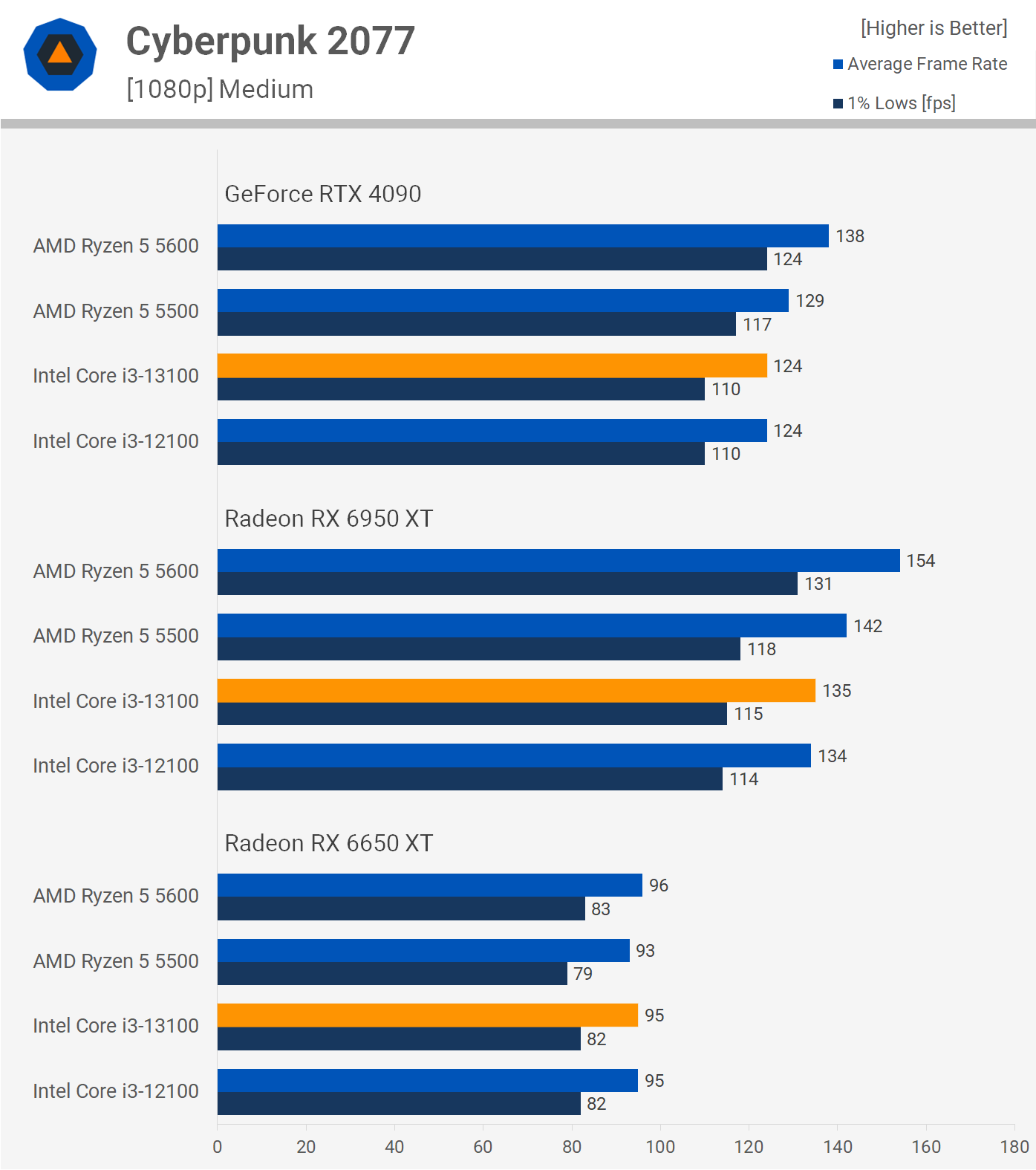

Next up we have Cyberpunk 2077 and this game is very GPU bound with the Radeon 6650 XT, even when using the medium quality settings. Once again due to the overhead issue, all four CPUs actually delivered higher performance with the Radeon 6950 XT than with the RTX 4090. The Ryzen 5600, for example, was 12% faster using the Radeon GPU. It was also 15% faster than the Core i3-13100, so another comfortable win here for the Zen 3 processor.

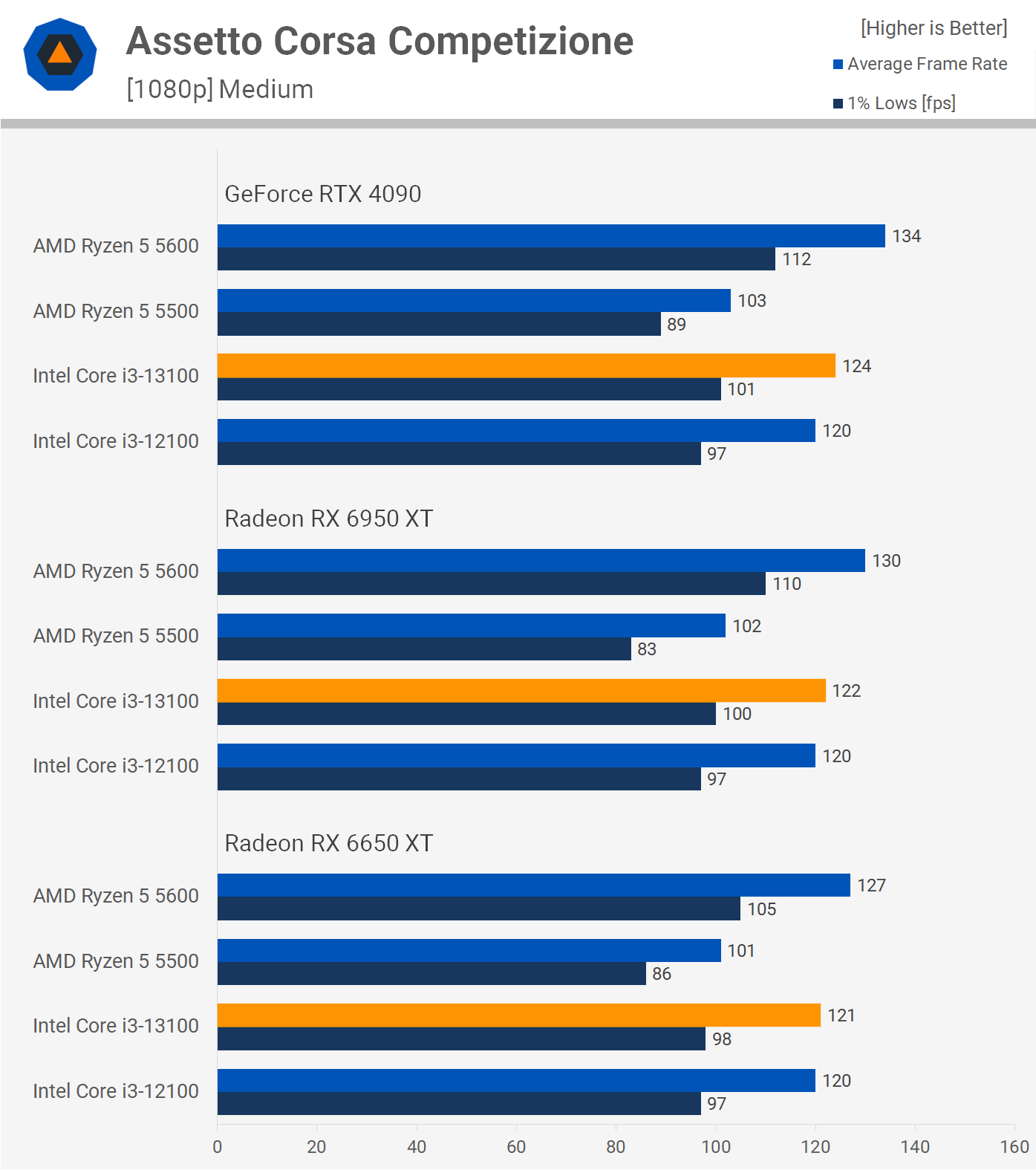

Assetto Corsa Competizione is a CPU demanding driving simulator. We’re using the medium quality preset which typically makes this title far more CPU than GPU bound using modern hardware.

As a result frame rates are almost identical using either the Radeon 6650 XT or 6950 XT. Using the faster of the two Radeon GPUs, the Ryzen 5600 was just 7% faster than the 13100, while the Ryzen 5500 was nowhere. L3 cache performance is very important in this title, which is why the 5500 struggles and parts like the 5800X3D really shine.

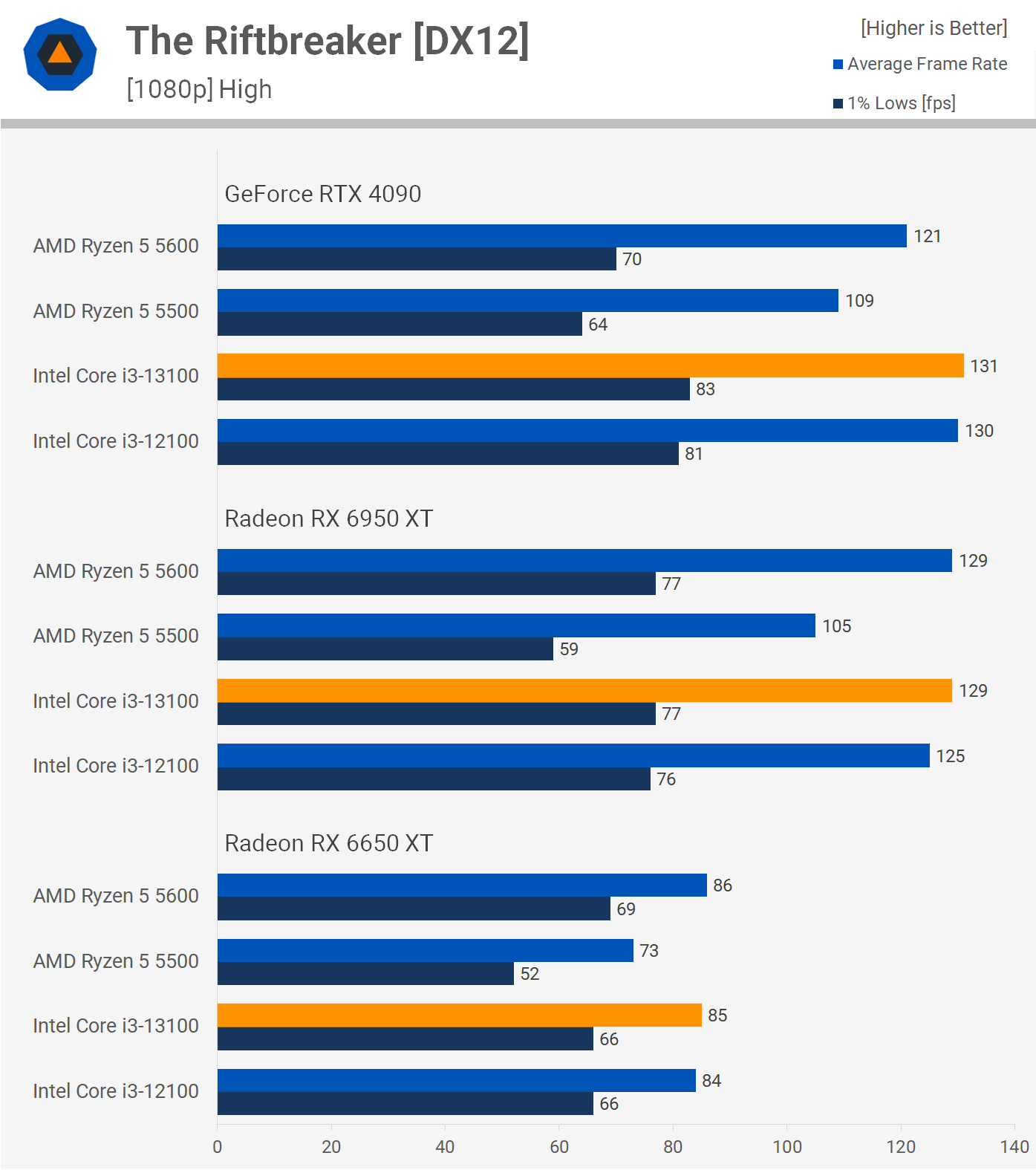

The Riftbreaker is a very CPU demanding game, though like most it relies heavily on the primary thread, so single core performance is important and we see this when comparing the Ryzen 5600 and Core i3-13100. The Ryzen 5500 also struggles as IPC is down thanks to the much smaller L3 cache.

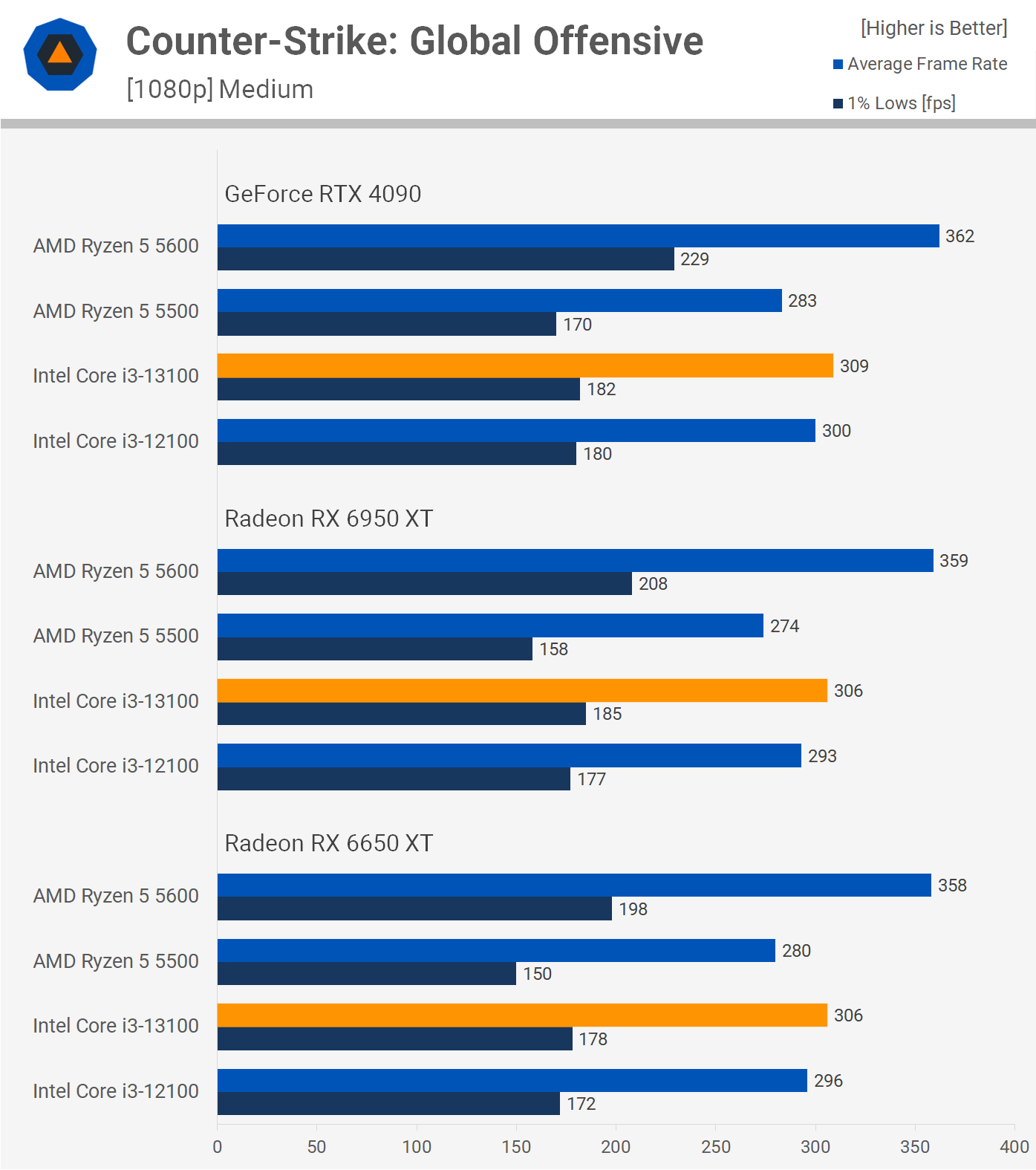

Counter-Strike is heavily CPU limited as visually the game hasn’t been overhauled in a long time. Using the Radeon 6650 XT, the Ryzen 5600 was 17% faster than the Core i3-13100 or 11% faster when comparing 1% lows.

The Ryzen 5500 was the slowest of the four CPUs tested with the i3-13100 up to 19% faster. Still, for the most part performance was similar between the 5500 and the Core i3s.

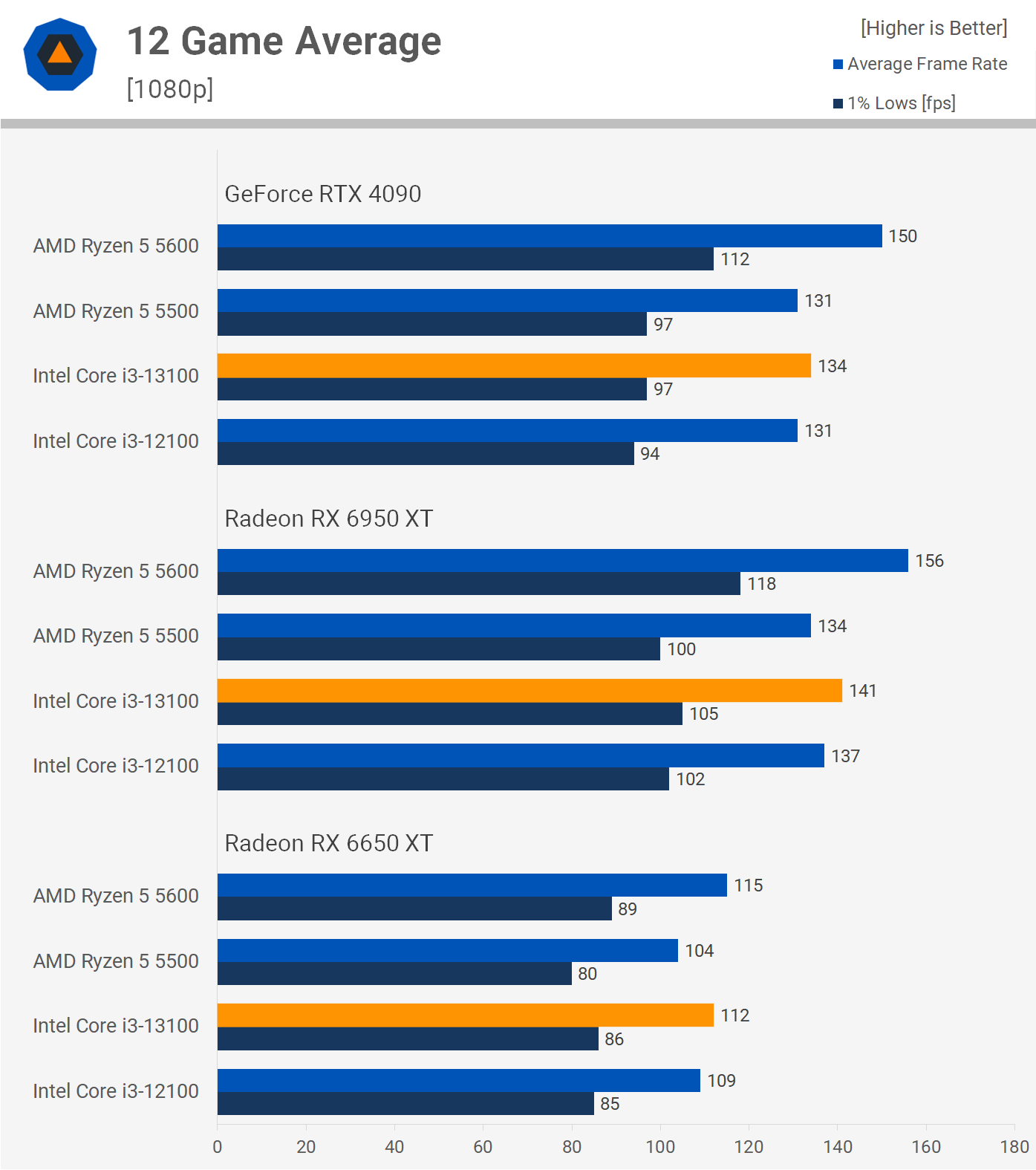

12 Game Average

Here’s the 12 game average and, as we often saw when using the Radeon 6650 XT, performance was similar across all four CPUs due to the GPU bottleneck, though there was some variance, mostly for the Ryzen 5500. The Ryzen 5600 was on average just 3% faster than the Core i3-13100, and anything within 5% we deem a tie.

Largely removing the GPU bottleneck with the Radeon 6950 XT sees the Ryzen 5600 pull ahead of the Core i3-13100 by an 11% margin, or 12% when comparing 1% lows.

That’s a decent performance advantage and we saw similar margins with the GeForce RTX 4090.
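The 5% tie rule is easy to express in code. The sketch below applies it to the two average frame rate margins quoted for the Radeon GPUs; the `verdict` helper is purely illustrative, and treating a margin of exactly 5% as a tie is an assumption, since the article only says "within 5%".

```python
TIE_THRESHOLD_PCT = 5.0  # the article treats anything within 5% as a tie


def verdict(margin_pct: float, threshold: float = TIE_THRESHOLD_PCT) -> str:
    """Classify a performance margin, in percent, from one CPU's perspective.

    Assumption: a margin of exactly `threshold` still counts as a tie.
    """
    if abs(margin_pct) <= threshold:
        return "tie"
    return "win" if margin_pct > 0 else "loss"


# Ryzen 5 5600 lead over the Core i3-13100 in the 12 game average.
margins = {"Radeon RX 6650 XT": 3.0, "Radeon RX 6950 XT": 11.0}
for gpu, margin in margins.items():
    print(f"{gpu}: 5600 ahead by {margin:.0f}% -> {verdict(margin)}")
```

By this rule the 6650 XT result is a tie, while the 6950 XT result counts as a clear win for the Ryzen 5 5600.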

What We Learned

It’s amazing how much the landscape of PC parts can change in less than a year. AMD went from being the king of budget CPUs a few years ago, to nowhere about a year ago, and now they’re back to being the obvious choice.

To be fair, we’ve been a bit slow on this update. The Ryzen 5 5600 dropped as low as $150 back in August, though it rose back up to $190 for a few months before plummeting as low as $120. As of writing, it’s selling for $150, and with the Core i3-13100 entering the market at that price this is an easy win for AMD.

It’s worth noting that the 13100F can be had for $125, while the 12100F is selling for as little as $110, so those F-SKUs are decent options at those prices, but if you want to save as much money as possible the Ryzen 5 5500 is hard to beat for $100.

If you go with an AMD AM4 platform you have the option of upgrading to the 5800X3D in the future for a massive FPS boost. Right now that CPU is selling for $340. Meanwhile, the Core i3s can also be upgraded later to the 13600K for similar money and the i5 offers similar gaming performance to the 3D V-Cache part. So although both platforms are “dead,” there are plenty of solid upgrade options already on offer.

For the Core i3-13100 to compete effectively with the Ryzen 5 5600 it needs to cost no more than $100, and then it would be a toss-up between the i3 and the 5500. At $150, however, it’s dead on arrival and even $125 for the F-SKU is too much to ask in our opinion.

As for GPU scaling benchmark results, we saw some interesting numbers yet nothing out of the ordinary given past findings. We know some of you were wishing the Radeon 7900 XTX was included, but truth be told, for sub-$200 parts these uber expensive GPUs are only used for scientific testing and we guess future-proofing to a degree as well.

If you enjoyed this type of article, know we’ll be doing more of it over the coming weeks and months. Adding the Ryzen 5 7600 to the mix could be really interesting, so let us know what you think about this potential matchup and any other you may be looking to see tested by us.